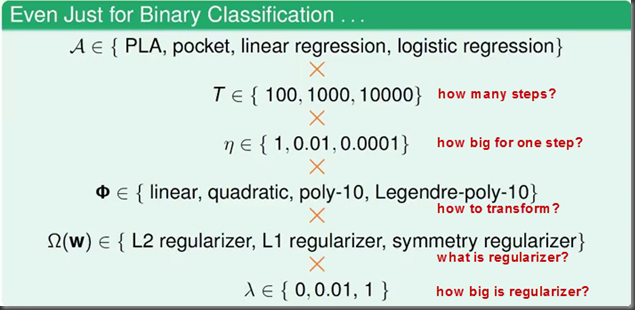

We have to try different combinations (of models, algorithms and parameters) to get a good g.

No, we cannot do it.

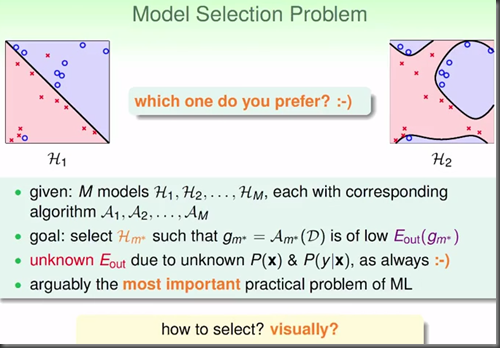

Selecting between two hypothesis sets by Ein effectively means learning over their union (two learnings combined), which increases the model complexity, and that is not what we want.

The problem is: where can we find such test data in advance?

Since Etest is infeasible, how about reserving part of the in-sample data and never using it for training from the beginning? Then we can validate on that reserved data later and compute the so-called Eval.

D = Dtrain + Dval = training data + validation data

K = the number of examples reserved for validation

N - K = size of the training data, used to obtain gm- (the hypothesis learned on Dtrain only)

1. Learning curve: remember the picture showing that the more data we learn from, the smaller Eout we get.

2. Use Dtrain to compute every gm-, then use Dval to check which one has the best Eval; that one becomes the best g- (for example, #37).

3. Why not then redo the learning for model #37 on all the data D to get the final gm?

Why retrain? Because the more data we put into Dval, the smaller Dtrain becomes, so Eout(g-) gets worse than Eout(g) trained on all of D.

It is really hard to choose K, because it would have to be very small (so that g- stays close to g) and very large (so that Eval is a reliable estimate of Eout) at the same time.
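The three steps above (train on Dtrain, pick by Eval, retrain on D) can be sketched in Python as below. This is a minimal sketch under assumptions: the two candidate learners (a constant model and a 1-D least-squares line), squared error, the random split and the toy data are hypothetical choices for illustration, not the lecture's exact setup.

```python
import random

def squared_error(h, data):
    """Average squared error of hypothesis h on a list of (x, y) pairs."""
    return sum((h(x) - y) ** 2 for x, y in data) / len(data)

def fit_constant(data):
    """Hypothetical learner: always predict the mean label."""
    c = sum(y for _, y in data) / len(data)
    return lambda x: c

def fit_linear(data):
    """Hypothetical learner: 1-D least-squares line y = w * x + b."""
    n = len(data)
    mx = sum(x for x, _ in data) / n
    my = sum(y for _, y in data) / n
    sxx = sum((x - mx) ** 2 for x, _ in data)
    sxy = sum((x - mx) * (y - my) for x, y in data)
    w = sxy / sxx if sxx else 0.0
    b = my - w * mx
    return lambda x: w * x + b

def select_by_validation(data, learners, k, seed=0):
    """Reserve K examples as D_val, train every learner on the other N - K
    (giving g_m^-), pick the smallest E_val, then retrain it on all of D."""
    shuffled = list(data)
    random.Random(seed).shuffle(shuffled)
    d_val, d_train = shuffled[:k], shuffled[k:]
    e_vals = [squared_error(fit(d_train), d_val) for fit in learners]
    best = min(range(len(learners)), key=lambda m: e_vals[m])
    g = learners[best](data)  # step 3: the final g uses all N examples
    return best, e_vals, g

# Hypothetical toy data: y = 2x + 1 plus small Gaussian noise, N = 20.
noise = random.Random(1)
data = [(x, 2 * x + 1 + noise.gauss(0, 0.1)) for x in range(20)]
best, e_vals, g = select_by_validation(data, [fit_constant, fit_linear], k=len(data) // 5)
print("chosen model:", best, "E_val per model:", e_vals)
```

Here k = N // 5 follows the common practical choice K = N/5; on this nearly linear toy data the line (index 1) should win by a wide margin.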

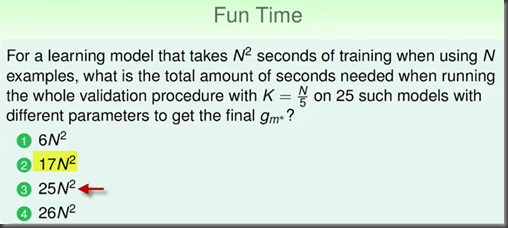

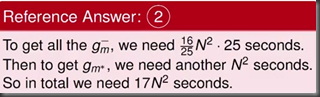

In practice K = N/5 is common, but some people use N/10, N/20, etc.

The answer is option 3: 25 * (4N/5)^2 + N^2 = 16N^2 + N^2 = 17N^2.

One interesting point here: learning with validation (17N^2) takes less time than normal learning without validation (25N^2).
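The quiz arithmetic can be checked with exact fractions. The assumptions, taken from the quiz as described here: 25 models, training on n examples costs n^2, K = N/5, and the cost of evaluating on Dval is ignored; `training_cost` is a hypothetical helper.

```python
from fractions import Fraction

def training_cost(n, num_models=25, val_fraction=Fraction(1, 5)):
    """Quiz cost model: training on n examples costs n**2.
    With validation, each model trains on (1 - val_fraction) * n examples,
    then the chosen model is retrained once on all n examples."""
    n_train = (1 - val_fraction) * n
    with_validation = num_models * n_train ** 2 + n ** 2
    without_validation = num_models * n ** 2
    return with_validation, without_validation

with_val, without_val = training_cost(Fraction(1))
print(with_val, without_val)  # 17 25 (in units of N^2)
```

Setting n = 1 expresses both costs in units of N^2.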

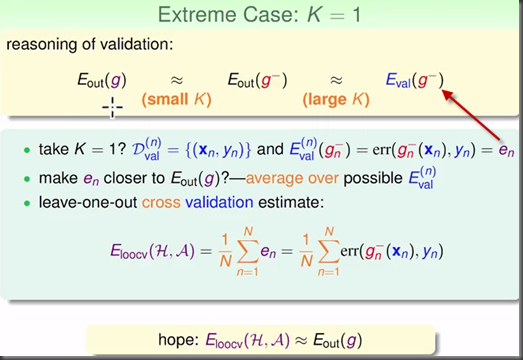

Take K = 1 as an example: we leave only one example out for validation, and en is the error on that specific validation example.

If we loop over every example, using each one in turn as the single validation example, and then average the errors, we get the Eloocv (leave-one-out cross-validation) estimate.
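The leave-one-out loop just described, Eloocv = (1/N) * sum of en, can be sketched as below. Assumptions: squared error, and two hypothetical learners (a constant model and a 1-D least-squares line) that mirror the two models discussed next; the toy data is made up.

```python
def squared_loss(prediction, y):
    return (prediction - y) ** 2

def fit_constant(data):
    """Hypothetical constant model: predict the mean label."""
    c = sum(y for _, y in data) / len(data)
    return lambda x: c

def fit_linear(data):
    """Hypothetical 1-D least-squares line y = w * x + b."""
    n = len(data)
    mx = sum(x for x, _ in data) / n
    my = sum(y for _, y in data) / n
    sxx = sum((x - mx) ** 2 for x, _ in data)
    sxy = sum((x - mx) * (y - my) for x, y in data)
    w = sxy / sxx if sxx else 0.0
    return lambda x: w * x + (my - w * mx)

def loocv(data, fit, loss):
    """E_loocv: for each n, train on D without (x_n, y_n) to get g_n^-,
    record e_n = loss(g_n^-(x_n), y_n), then average the N errors."""
    errors = []
    for n, (x_n, y_n) in enumerate(data):
        g_minus = fit(data[:n] + data[n + 1:])
        errors.append(loss(g_minus(x_n), y_n))
    return sum(errors) / len(errors)

# Hypothetical data lying exactly on the line y = 3x - 2.
line_data = [(x, 3 * x - 2) for x in range(5)]
print(loocv(line_data, fit_constant, squared_loss))
print(loocv(line_data, fit_linear, squared_loss))
```

Note that the learner runs N times, once per left-out example; that is the cost issue raised later in this post.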

There are two models here: the first is a linear model and the other is a constant model.

By calculating both Eloocv values, we find that the second one (constant) is better.

Why? Look at e1 of model 1: the error is the squared distance, which is very large.

If we take N = 1000 as an example, Eout(N - 1) means: the expected Eloocv over data sets of size 1000 equals the expected Eout of the hypotheses g- trained on 999 examples, and since 999 is almost 1000, this is almost the same as Eout(g).
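The claim in the previous paragraph follows from the standard argument sketched below. Notation assumption: D_n denotes D with (x_n, y_n) removed, so it has N - 1 examples, g_n^- is the hypothesis learned on D_n, and the overline on Eout means the expectation over training sets of the given size.

```latex
\mathbb{E}_{\mathcal{D}}\big[E_{\text{loocv}}(\mathcal{H},\mathcal{A})\big]
  = \frac{1}{N}\sum_{n=1}^{N}\mathbb{E}_{\mathcal{D}}[e_n]
  = \frac{1}{N}\sum_{n=1}^{N}\mathbb{E}_{\mathcal{D}_n}\,\mathbb{E}_{(\mathbf{x}_n,y_n)}\big[\mathrm{err}\big(g_n^-(\mathbf{x}_n),\,y_n\big)\big]
  = \frac{1}{N}\sum_{n=1}^{N}\mathbb{E}_{\mathcal{D}_n}\big[E_{\text{out}}(g_n^-)\big]
  = \frac{1}{N}\sum_{n=1}^{N}\overline{E_{\text{out}}}(N-1)
  = \overline{E_{\text{out}}}(N-1)
```

The key step is that (x_n, y_n) is independent of D_n, so the inner expectation is exactly the out-of-sample error of g_n^-.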

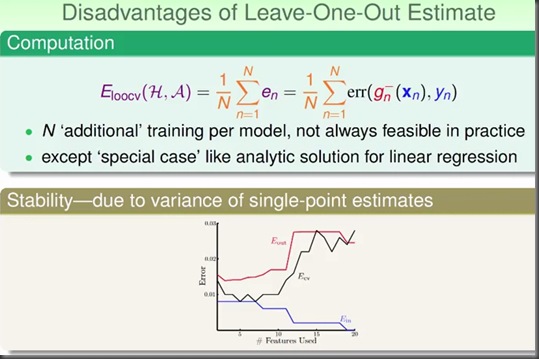

1. The more features we use, the better (lower) Ein gets, but the resulting curve is overly complicated.

2. Eout is usually smallest when a moderate number of features is used; too many features lead to overfitting.

3. Leave-one-out cross-validation shows that using 5 or 7 features gives a lower Eloocv, and the chosen curve is simpler than the one picked by Ein.

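With the constant model, the prediction when one point is left out is the average of the other two labels: for e1 it is 6 (from 5 and 7), for e2 it is 4 (from 1 and 7), and for e3 it is 3 (from 1 and 5).

so Eloocv = ((6 - 1)^2 + (4 - 5)^2 + (3 - 7)^2)/3 = (25 + 1 + 16)/3 = 42/3 = 14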
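The quiz calculation can be reproduced with a few lines of Python, assuming the three labels are y1 = 1, y2 = 5, y3 = 7 (the values consistent with 25 + 1 + 16) and squared error; `loocv_constant` is a hypothetical helper name.

```python
def loocv_constant(ys):
    """LOOCV with the constant model: leave y_n out, predict the mean of
    the remaining labels, and record the squared error."""
    n = len(ys)
    errors = []
    for i in range(n):
        rest = ys[:i] + ys[i + 1:]
        c = sum(rest) / len(rest)
        errors.append((c - ys[i]) ** 2)
    return errors, sum(errors) / n

errors, e_loocv = loocv_constant([1, 5, 7])
print(errors, e_loocv)  # [25.0, 1.0, 16.0] 14.0
```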

If the data size is 1000, we have to train 1000 times, which is not feasible in practice.

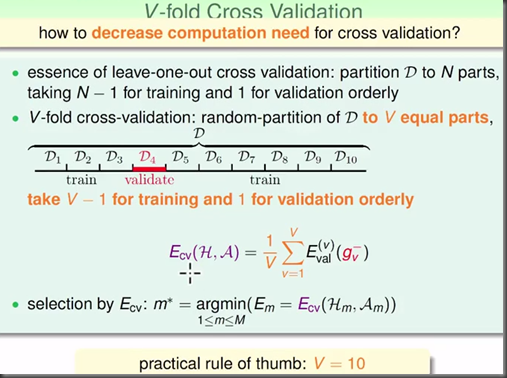

Instead of training 1000 times, how about cutting the data into only V = 10 parts, so that we decrease the computation needed?
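The V-fold idea can be sketched as below: train on V - 1 parts, validate on the remaining part, and average over the V parts. Assumptions: squared error, a hypothetical constant-model learner, a simple round-robin split (in practice D would be shuffled first), and made-up data; the training counter is only there to show that V = 10 means 10 trainings instead of 1000.

```python
def squared_loss(prediction, y):
    return (prediction - y) ** 2

def fit_constant(data):
    """Hypothetical constant model: predict the mean label."""
    c = sum(y for _, y in data) / len(data)
    return lambda x: c

def v_fold_cv(data, fit, loss, v=10):
    """Split D into V folds; for each fold, train on the other V - 1 folds
    and measure the average loss on the held-out fold; return the mean of
    the V fold errors. Assumes len(data) >= v so no fold is empty."""
    folds = [data[i::v] for i in range(v)]  # round-robin split
    fold_errors = []
    for i, val_fold in enumerate(folds):
        train = [p for j, fold in enumerate(folds) if j != i for p in fold]
        g_minus = fit(train)
        fold_errors.append(sum(loss(g_minus(x), y) for x, y in val_fold) / len(val_fold))
    return sum(fold_errors) / v

# Count how many times the learner is trained when N = 1000 and V = 10.
calls = [0]
def counting_fit(train):
    calls[0] += 1
    return fit_constant(train)

data = [(x, x % 7) for x in range(1000)]  # hypothetical data
estimate = v_fold_cv(data, counting_fit, squared_loss, v=10)
print(calls[0])  # 10 trainings instead of 1000 for leave-one-out

# With V = N, V-fold CV reduces to leave-one-out (the 3-point quiz above).
print(v_fold_cv([(0, 1), (1, 5), (2, 7)], fit_constant, squared_loss, v=3))  # 14.0
```

The last line illustrates the design trade-off: leave-one-out is simply the special case V = N, so V controls how much computation we spend on the estimate.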
